Log Analytics Workspace Chaos: How We Tamed 100+ Orphaned Workspaces

The Problem That Kept Me Up at Night

Let me paint you a picture. You’re managing an Azure environment, and over the years, different teams have spun up Log Analytics workspaces like they’re going out of style. Development team needs one? Boom, new workspace. QA wants their own? Sure, why not. That POC project from 2022? Yeah, it probably has three workspaces nobody remembers.

Fast forward to today, and you’re staring at over 100 Log Analytics workspaces across multiple subscriptions. Some are actively used, some are ghost towns, and honestly? You have no idea which is which. The monthly Azure bill keeps climbing, and management is asking questions you can’t answer:

- “Can we delete this workspace?”

- “What’s actually using it?”

- “How much is this costing us?”

- “Will anything break if we remove it?”

Sound familiar? This was my reality six months ago.

The “Just Delete It” Disaster

Here’s what happened when we tried the cowboy approach. A colleague decided to clean up what looked like an unused workspace. No data in the portal, no obvious activity; it seemed safe enough.

Three hours later, we had:

- Two production alerts that stopped firing (hello, missed incidents!)

- An Application Insights resource for a web app that couldn’t send telemetry

- Three App Services with broken diagnostic settings

- One very angry DevOps Engineer

Turns out, the workspace wasn’t empty; it just looked empty at a casual glance. The data was there, the dependencies were real, and we learned a very expensive lesson: you can’t audit Log Analytics workspaces with your eyeballs.

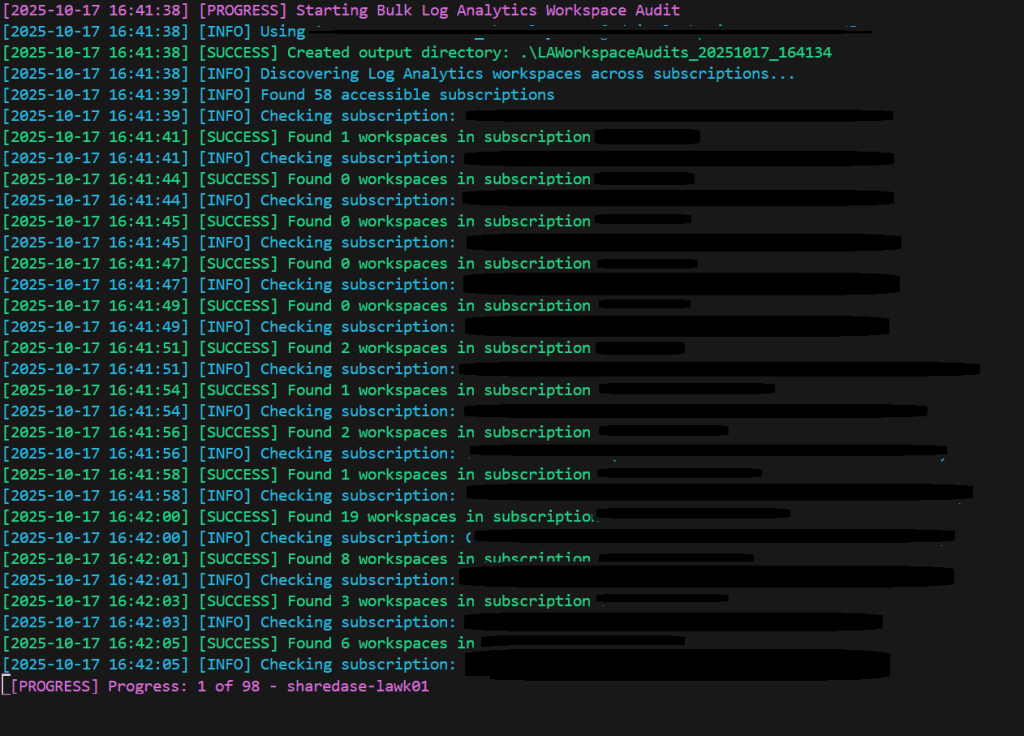

Enter the Bulk Audit Tool

After that incident, I spent a weekend building (with the help of AI) something we desperately needed: an automated auditing tool that could answer all those questions before we touched anything. Not just for one workspace, but for all of them.

This PowerShell script became our safety net. It does what should be obvious but somehow isn’t: it actually looks at what’s in each workspace, what’s connected to it, and what would break if you deleted it.

What This Tool Actually Does

Think of it as a full health check and dependency scanner rolled into one. For each workspace, it:

1. Discovers All Your Workspaces

Scans across all your subscriptions (or specific ones you target) and finds every Log Analytics workspace. No more “I didn’t know that existed” surprises.

```powershell
.\BulkLAWorkspaceAudit.ps1 -AllWorkspaces -GenerateSummaryReport
```

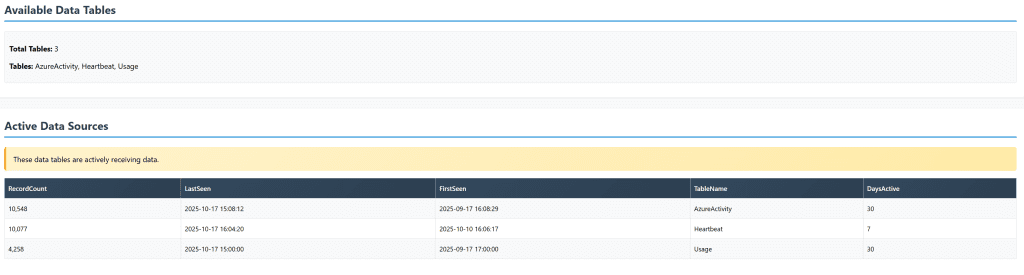
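For the curious, the discovery step can be sketched in a few lines. This is a minimal illustration, not code from BulkLAWorkspaceAudit.ps1: the function name Get-LAWorkspaceInventory is hypothetical, and it assumes the Az.Accounts and Az.OperationalInsights modules are installed and that you have already run Connect-AzAccount.

```powershell
# Hypothetical helper, not taken from BulkLAWorkspaceAudit.ps1.
# Lists every Log Analytics workspace in the given subscriptions,
# or in all enabled subscriptions when none are specified.
function Get-LAWorkspaceInventory {
    [CmdletBinding()]
    param(
        [string[]] $SubscriptionIds
    )

    $subscriptions = if ($SubscriptionIds) {
        $SubscriptionIds | ForEach-Object { Get-AzSubscription -SubscriptionId $_ }
    } else {
        Get-AzSubscription | Where-Object State -eq 'Enabled'
    }

    foreach ($sub in $subscriptions) {
        # Point the session at this subscription before listing its workspaces.
        Set-AzContext -SubscriptionId $sub.Id | Out-Null

        foreach ($ws in (Get-AzOperationalInsightsWorkspace)) {
            [pscustomobject]@{
                SubscriptionName  = $sub.Name
                ResourceGroupName = $ws.ResourceGroupName
                WorkspaceName     = $ws.Name
                WorkspaceId       = $ws.CustomerId   # GUID used for KQL queries
                ResourceId        = $ws.ResourceId   # ARM ID referenced by diagnostic settings
                RetentionInDays   = $ws.RetentionInDays
            }
        }
    }
}
```

Usage, once the function is loaded: `Get-LAWorkspaceInventory | Format-Table WorkspaceName, ResourceGroupName, SubscriptionName`. Switching context per subscription keeps the sketch simple; for very large estates, Azure Resource Graph may be faster.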

2. Analyzes Active Data Tables

It doesn’t just count tables; it checks which ones are actually receiving data. That SecurityEvent table that hasn’t received a single record in 90 days? The script knows it’s dead weight.
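Here is one way to check for active tables, sketched under assumptions rather than taken from the script. It queries the workspace’s built-in Usage table, which records ingestion volume per table (DataType, with Quantity in MB), as a cheap proxy instead of scanning every table, and runs the query with Invoke-AzOperationalInsightsQuery from Az.OperationalInsights. Both function names are hypothetical, and DaysBack only mirrors the script’s -DaysBack option by analogy.

```powershell
# Hypothetical helper: builds a KQL query that returns one row per table
# (DataType) that ingested data inside the window.
function New-ActiveTableQuery {
    param([int] $DaysBack = 30)

    @"
Usage
| where TimeGenerated > ago(${DaysBack}d)
| summarize IngestedMB = sum(Quantity), LastSeen = max(TimeGenerated) by DataType
| sort by IngestedMB desc
"@
}

# Hypothetical helper: runs that query against one workspace (needs Az.OperationalInsights).
function Get-ActiveTable {
    param(
        [Parameter(Mandatory)][string] $WorkspaceId,  # workspace GUID (CustomerId), not the ARM ID
        [int] $DaysBack = 30
    )
    $query = New-ActiveTableQuery -DaysBack $DaysBack
    (Invoke-AzOperationalInsightsQuery -WorkspaceId $WorkspaceId -Query $query).Results
}
```

A table that exists in the workspace schema but is missing from this result received nothing during the window. The window can only reach back as far as the workspace’s retention period.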

3. Identifies Application Insights Data

Automatically detects whether the workspace holds data from a workspace-based Application Insights resource. These need special handling, and the script flags them clearly.

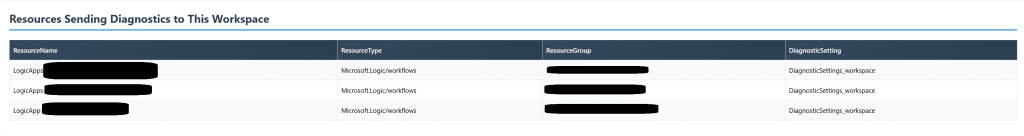
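Workspace-based Application Insights writes its telemetry to tables such as AppRequests, AppDependencies, and AppTraces, so one simple detection heuristic is to look for those table names among the workspace’s active tables. The helper below is a hypothetical sketch of that heuristic, not the script’s actual logic.

```powershell
# Hypothetical heuristic: a workspace backs workspace-based Application
# Insights if any of its active tables is one of the App* tables.
function Test-AppInsightsData {
    param([string[]] $ActiveTableNames)

    # Tables written by workspace-based Application Insights.
    $appInsightsTables = @(
        'AppAvailabilityResults', 'AppBrowserTimings', 'AppDependencies',
        'AppEvents', 'AppExceptions', 'AppMetrics', 'AppPageViews',
        'AppPerformanceCounters', 'AppRequests', 'AppSystemEvents', 'AppTraces'
    )

    # -contains compares strings case-insensitively.
    $hits = @($ActiveTableNames | Where-Object { $appInsightsTables -contains $_ })

    [pscustomobject]@{
        HasAppInsightsData = $hits.Count -gt 0
        AppInsightsTables  = $hits
    }
}
```

Pass it the names of the tables that received data during the analysis window, for example `Test-AppInsightsData -ActiveTableNames @('Heartbeat', 'AppRequests')`.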

4. Maps Diagnostic Settings

This is the big one. It scans your Azure resources (App Services, Logic Apps, Functions, VMs, SQL Servers) and tells you which ones are sending diagnostics to each workspace. This is your “oh crap, this IS being used” detector.

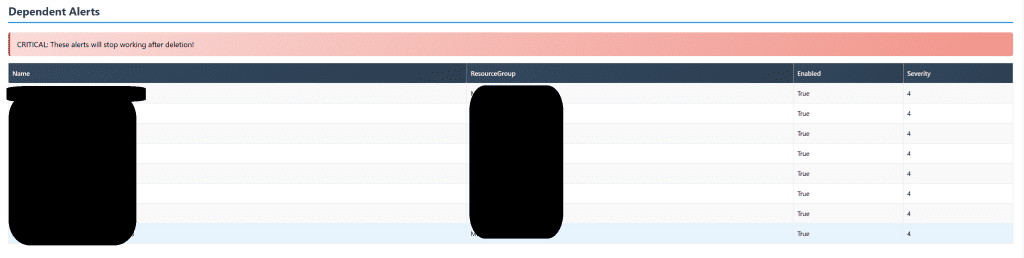
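A rough sketch of the dependency scan, assuming the Az.Resources and Az.Monitor modules and a context already set to the subscription you want to scan. Both function names and the default resource-type list are hypothetical; the pure filter is kept separate so it can be tested without Azure. Calling Get-AzDiagnosticSetting once per resource is slow on big subscriptions, so treat this as an illustration rather than an efficient implementation.

```powershell
# Pure filter: keep only settings that send data to the given workspace.
function Select-DiagnosticSettingForWorkspace {
    param(
        [Parameter(Mandatory)][string] $WorkspaceResourceId,
        [object[]] $Setting
    )
    # ARM IDs can come back with different casing; -eq on strings is case-insensitive.
    $Setting | Where-Object {
        $_.WorkspaceId -and ($_.WorkspaceId.TrimEnd('/') -eq $WorkspaceResourceId.TrimEnd('/'))
    }
}

# Azure-dependent scan over the current subscription (Az.Resources + Az.Monitor).
function Find-DiagnosticSettingForWorkspace {
    param(
        [Parameter(Mandatory)][string] $WorkspaceResourceId,
        [string[]] $ResourceType = @(
            'Microsoft.Web/sites',              # App Services, Function Apps, Logic Apps (Standard)
            'Microsoft.Logic/workflows',        # Logic Apps (Consumption)
            'Microsoft.Sql/servers/databases',  # SQL databases
            'Microsoft.Compute/virtualMachines'
        )
    )
    $resources = Get-AzResource | Where-Object { $ResourceType -contains $_.ResourceType }
    foreach ($resource in $resources) {
        # Resource types without diagnostic settings support raise errors; skip them.
        $settings = Get-AzDiagnosticSetting -ResourceId $resource.ResourceId -ErrorAction SilentlyContinue
        $matched  = Select-DiagnosticSettingForWorkspace -WorkspaceResourceId $WorkspaceResourceId -Setting $settings
        foreach ($s in $matched) {
            [pscustomobject]@{
                ResourceName = $resource.Name
                ResourceType = $resource.ResourceType
                SettingName  = $s.Name
            }
        }
    }
}
```

VMs usually ship logs through the Azure Monitor Agent and data collection rules rather than diagnostic settings, so a clean result for a VM does not prove it is disconnected; this sketch does not check data collection rules.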

5. Finds Dependent Alerts

Checks for scheduled query alerts that rely on the workspace. Delete the workspace, and these alerts go silent. Not ideal for production monitoring.
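One way to find dependent alerts across subscriptions is an Azure Resource Graph query over log search alert rules (resource type microsoft.insights/scheduledqueryrules). This is a swapped-in technique, not necessarily what the script does, and the function name is hypothetical. The sketch assumes newer rules list their target in properties.scopes and legacy rules in properties.source.dataSourceId; verify both paths against your own rules before relying on it.

```powershell
# Hypothetical helper: builds an Azure Resource Graph query that lists
# log search alert rules targeting the given workspace.
function New-WorkspaceAlertQuery {
    param([Parameter(Mandatory)][string] $WorkspaceResourceId)

    # Escape single quotes so the ID is safe inside a KQL string literal.
    $id = $WorkspaceResourceId.Replace("'", "\'")

    # Newer rules: properties.scopes (array). Legacy rules: properties.source.dataSourceId.
    @"
resources
| where type =~ 'microsoft.insights/scheduledqueryrules'
| extend targets = iff(isnotnull(properties.scopes), properties.scopes, pack_array(properties.source.dataSourceId))
| mv-expand target = targets
| where tostring(target) =~ '$id'
| project name, resourceGroup, subscriptionId, enabled = properties.enabled
"@
}
```

Run it with Search-AzGraph from the Az.ResourceGraph module, for example `Search-AzGraph -Query (New-WorkspaceAlertQuery -WorkspaceResourceId $workspaceResourceId)`. Alert rules that reach the workspace only through a cross-resource `workspace()` reference inside their query text are not caught by a scope match.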

6. Calculates Actual Costs

Pulls Cost Management data to show you what each workspace has cost over the last 30 days. Real euros, real budget impact. (Note: this feature is not working at the moment; a fix is in progress.)

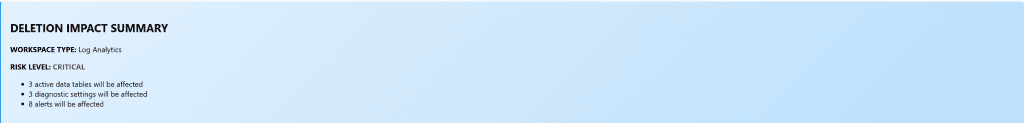

7. Assigns Risk Levels

Based on everything it finds, it gives you a simple risk rating:

- LOW: Empty or minimal usage, safe to delete

- MEDIUM: Has some data but no critical dependencies

- HIGH: Active diagnostic settings connected

- CRITICAL: Alerts would break, proceed with extreme caution
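The four risk levels map naturally to a precedence check in which the most severe matching rule wins. The function below is a hypothetical sketch of that mapping; it treats only a workspace with no active tables as LOW, because the exact “minimal usage” threshold the script applies is not described here.

```powershell
# Hypothetical mapping of audit findings to the four risk levels.
# Checks run from most to least severe; the first match wins.
function Get-WorkspaceRiskLevel {
    param(
        [int] $AlertCount,              # scheduled query alerts targeting the workspace
        [int] $DiagnosticSettingCount,  # resources sending diagnostics to it
        [int] $ActiveTableCount         # tables that received data in the window
    )
    if ($AlertCount -gt 0)             { return 'CRITICAL' }  # alerts would break
    if ($DiagnosticSettingCount -gt 0) { return 'HIGH' }      # live data sources attached
    if ($ActiveTableCount -gt 0)       { return 'MEDIUM' }    # data, but no dependencies found
    return 'LOW'                                               # nothing arrived in the window
}
```

For example, `Get-WorkspaceRiskLevel -DiagnosticSettingCount 2 -ActiveTableCount 5` returns HIGH: the diagnostic settings outrank the data volume.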

8. Generates Beautiful HTML Reports

Creates individual reports for each workspace AND a summary report showing your entire estate at a glance. No more spreadsheets, no more guesswork.
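PowerShell’s built-in ConvertTo-Html cmdlet is enough for a basic summary page. The sketch below is a hypothetical stand-in for the script’s report generator; it assumes each audit result exposes WorkspaceName, ResourceGroupName, RiskLevel, and ActiveTableCount properties.

```powershell
# Hypothetical summary-report builder using the built-in ConvertTo-Html.
function New-AuditSummaryHtml {
    param([Parameter(Mandatory)][object[]] $AuditResult)

    # ConvertTo-Html ignores -Title when -Head is given, so the <title> goes in -Head.
    $head = '<title>Log Analytics Workspace Audit</title>' +
            '<style>table{border-collapse:collapse} th,td{border:1px solid #ccc;padding:4px}</style>'

    $AuditResult |
        Sort-Object WorkspaceName |
        ConvertTo-Html -Property WorkspaceName, ResourceGroupName, RiskLevel, ActiveTableCount `
            -Head $head `
            -PreContent "<h1>Workspace audit: $($AuditResult.Count) workspaces</h1>" |
        Out-String
}
```

To write it to disk: `New-AuditSummaryHtml -AuditResult $results | Set-Content -Path .\summary.html -Encoding utf8`, where `$results` is your collection of audit objects.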

How to Use It

Quick Start – Audit Everything

The simplest approach is to audit all workspaces across all your subscriptions:

```powershell
# Log in first
Connect-AzAccount

# Audit all workspaces and generate a summary
.\BulkLAWorkspaceAudit.ps1 -AllWorkspaces -GenerateSummaryReport
```

This creates an output folder with individual reports for each workspace plus a master summary. Open the summary HTML in your browser, and you’ll immediately see which workspaces are high-risk vs. safe to delete.

Target Specific Subscriptions

If you only want to audit certain subscriptions (smart move for large enterprises):

```powershell
.\BulkLAWorkspaceAudit.ps1 `
    -AllWorkspaces `
    -SubscriptionIds @("sub-id-1", "sub-id-2", "sub-id-3") `
    -GenerateSummaryReport
```

Single Workspace Deep Dive

Need to investigate one specific workspace before making a decision?

```powershell
.\BulkLAWorkspaceAudit.ps1 `
    -WorkspaceName "production-logs" `
    -ResourceGroupName "prod-monitoring-rg"
```

Adjust the Analysis Window

By default, it looks at the last 30 days. You can shorten the window for a more aggressive cleanup, or lengthen it to be more conservative:

```powershell
# Only check the last 7 days (more aggressive cleanup)
.\BulkLAWorkspaceAudit.ps1 -AllWorkspaces -DaysBack 7

# Or be more conservative and check the last 90 days
.\BulkLAWorkspaceAudit.ps1 -AllWorkspaces -DaysBack 90
```

Real-World Use Cases

Use Case 1: The Big Spring Cleaning

Scenario: Your CFO wants to cut cloud costs by 15%. You need to identify optimization opportunities fast.

Solution: Run the bulk audit with -AllWorkspaces. Sort the summary report by cost. You’ll immediately see:

- Expensive workspaces with LOW risk (easy wins!)

- Workspaces collecting data nobody looks at

- Duplicate workspaces serving the same purpose

In our case, we found 23 workspaces marked LOW or MEDIUM risk that were costing us €8,000/month. Easy decisions.

Use Case 2: Pre-Migration Assessment

Scenario: You’re consolidating multiple workspaces into a centralized logging strategy.

Solution: Audit all current workspaces to understand:

- Which ones have active Application Insights data (need special handling)

- What diagnostic settings will need to be reconfigured

- Which alerts need to be migrated to the new workspace

The script’s diagnostic settings mapping becomes your migration checklist.
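To turn that mapping into an actual checklist, grouping by resource gives one reconfiguration task per resource. This is a hypothetical sketch; it assumes each mapping record carries WorkspaceName, ResourceName, and SettingName properties, which may not match the script’s actual output.

```powershell
# Hypothetical helper: one checklist row per resource that must be repointed.
function New-MigrationChecklist {
    param(
        [Parameter(Mandatory)][object[]] $DiagnosticMapping,
        [Parameter(Mandatory)][string] $TargetWorkspaceName
    )
    $DiagnosticMapping |
        Group-Object ResourceName |
        Sort-Object Name |
        ForEach-Object {
            [pscustomobject]@{
                Resource       = $_.Name
                Settings       = ($_.Group.SettingName | Sort-Object -Unique) -join ', '
                FromWorkspaces = ($_.Group.WorkspaceName | Sort-Object -Unique) -join ', '
                Task           = "Repoint to $TargetWorkspaceName"
                Done           = $false
            }
        }
}
```

Piping the result to Export-Csv gives a checklist that teams can tick off as they reconfigure each resource.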

Use Case 3: Compliance Audit

Scenario: Security team needs to know which workspaces contain what type of data and who’s using them.

Solution: Run the audit and export the data. The reports show:

- Table names (SecurityEvent, Syslog, and similar tables indicate security-relevant data)

- Resource types sending data (helps identify data owners)

- Active vs. inactive workspaces (data retention compliance)
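For the compliance question, a small filter over the audit results can list which workspaces hold security-relevant tables. Both the function and its table list are hypothetical and illustrative rather than exhaustive; it assumes each result has WorkspaceName and ActiveTables properties.

```powershell
# Hypothetical filter: which workspaces contain security-relevant tables?
function Find-SecurityDataWorkspace {
    param([Parameter(Mandatory)][object[]] $AuditResult)

    # Illustrative list of tables that usually hold security data.
    $securityTables = @(
        'SecurityEvent', 'Syslog', 'CommonSecurityLog', 'SigninLogs',
        'AuditLogs', 'AzureActivity', 'WindowsEvent', 'SecurityAlert'
    )

    foreach ($ws in $AuditResult) {
        $hits = @($ws.ActiveTables | Where-Object { $securityTables -contains $_ })
        if ($hits.Count -gt 0) {
            [pscustomobject]@{
                WorkspaceName  = $ws.WorkspaceName
                SecurityTables = ($hits | Sort-Object -Unique) -join ', '
            }
        }
    }
}
```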

Use Case 4: Inheriting an Unknown Azure Environment

Scenario: You’re acquiring another company’s Azure environment. You need to understand their Log Analytics setup.

Solution: Get contributor access to their subscriptions, run the audit. Within hours, you know:

- Total workspace footprint

- Critical dependencies

- Cost implications

- Technical debt (orphaned workspaces)

The Results: Our Success Story

After implementing this tool, here’s what changed for us:

Before the audit:

- 127 Log Analytics workspaces

- ~€15,000/month in Log Analytics costs

- Zero visibility into usage

- Afraid to delete anything

After the audit + cleanup:

- 34 workspaces (73% reduction!)

- ~€4,200/month in costs (72% savings!)

- Complete documentation of what each workspace does

- Confident decision-making process

But the real win? No production incidents during cleanup. Every workspace we deleted was marked LOW risk, and we had the data to back up our decisions.

Pro Tips from the Trenches

1. Start with a Read-Only Run

Your first audit is pure reconnaissance. Don’t delete anything yet. Just generate the reports and share them with the relevant teams. You’ll learn things about your environment you didn’t know.

2. Use the Risk Levels as Guidelines, Not Gospel

A HIGH-risk workspace isn’t necessarily “don’t touch it ever.” It means “talk to the teams first.” We’ve deleted HIGH-risk workspaces after confirming with owners that the diagnostic settings were legacy cruft.

3. Schedule Regular Audits

We run this quarterly now. New workspaces appear, old ones become obsolete. Make it part of your regular housekeeping.

4. Export the Data

The HTML reports are great for humans, but keep the raw data. We pipe the results into our CMDB to track workspace lifecycle.

5. Cost Threshold Alerts

We added a second pass: any workspace costing more than €200/month gets flagged for review, regardless of risk level. Sometimes expensive LOW-risk workspaces are still worth investigating.
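The last two tips combine naturally: flag anything above the cost threshold regardless of risk, then keep the raw results in machine-readable form for a CMDB. This is a hypothetical sketch; it assumes each audit result carries WorkspaceName, RiskLevel, and MonthlyCostEur properties. Because the cost feature is currently not working, MonthlyCostEur may be empty, in which case nothing gets flagged.

```powershell
# Hypothetical helper: add a cost-review flag, then save the raw results as CSV and JSON.
function Export-AuditResult {
    param(
        [Parameter(Mandatory)][object[]] $AuditResult,
        [Parameter(Mandatory)][string] $OutputDirectory,
        [decimal] $CostThresholdEur = 200
    )
    # Copy each record with an extra flag instead of mutating the input objects.
    # A missing cost ($null) never exceeds the threshold.
    $flagged = $AuditResult | Select-Object *, @{
        Name       = 'NeedsCostReview'
        Expression = { $_.MonthlyCostEur -gt $CostThresholdEur }
    }

    New-Item -ItemType Directory -Path $OutputDirectory -Force | Out-Null
    $flagged | Export-Csv -Path (Join-Path $OutputDirectory 'audit.csv') -NoTypeInformation
    $flagged | ConvertTo-Json -Depth 5 |
        Set-Content -Path (Join-Path $OutputDirectory 'audit.json') -Encoding utf8
    $flagged
}
```

Keeping the flag separate from RiskLevel follows the tip: an expensive LOW-risk workspace still lands on the review list.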

What’s Next?

We’re continuously improving this tool. Current wishlist includes:

- Workspace-to-workspace data flow mapping

- Retention policy analysis and recommendations

- Automated deletion with approval workflows

- Integration with Azure DevOps for change tracking

But honestly? Even in its current form, this tool has paid for itself a hundred times over.

The Bottom Line

If you’re managing more than a handful of Log Analytics workspaces, you need systematic auditing. Manual reviews don’t scale, and “it looks unused” isn’t a strategy.

This tool isn’t fancy. It’s not AI-powered or blockchain-enabled (thank god). It’s just a well-crafted PowerShell script that does the boring, important work of actually checking what’s in your environment.

And sometimes, that’s exactly what you need.

Link: BulkLAWorkspaceAudit.ps1 in the Log_Analytics_Workspace_report folder of the achrafbenalaya/achrafbenalaya.com repository on GitHub (main branch)


Got questions? Found this useful? I’d love to hear about your Log Analytics cleanup adventures. What worked? What disasters did you narrowly avoid? Drop a comment or reach out.

Want to contribute? The script is designed to be extended. If you add cool features (better cost analysis, workspace recommendations, etc.), share them back with the community.

Remember: In cloud cost management, knowledge is literally money. Every workspace you can confidently delete is savings you can track. And every CRITICAL workspace you DON’T delete accidentally is a disaster you avoided.

Happy auditing! 🔍